Faculty

Department Faculty

Our faculty are renowned leaders in their respective fields, extraordinary teachers, and dedicated mentors.

Home to world-class teaching and interdisciplinary research, the Department of Electrical and Computer Engineering prides itself on immersive education, creative problem-solving, and close mentorship, with an emphasis on devices, systems engineering, signal processing, and machine learning.

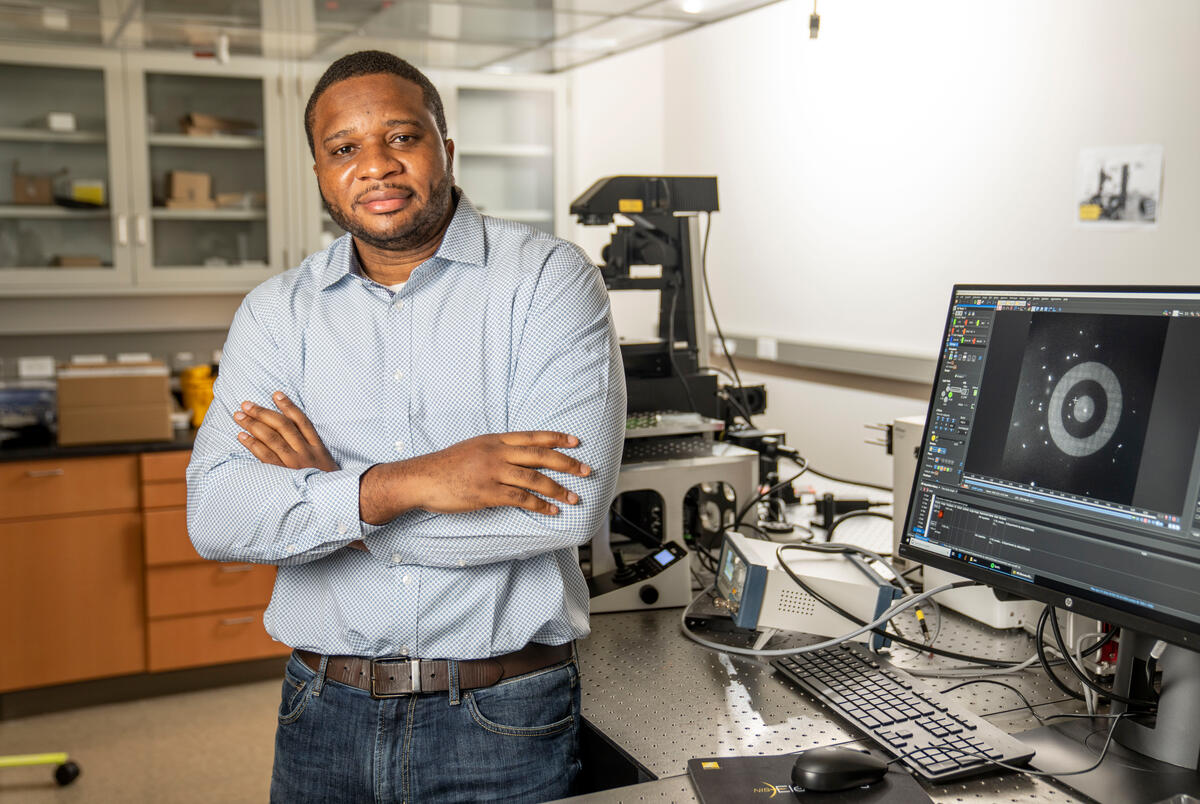

Vanderbilt researchers have developed a cutting-edge plasmonic nanotweezer that traps nanoscale objects, such as potentially cancerous extracellular vesicles, more quickly and precisely.

A shared passion for research and education is a hallmark of the Vanderbilt environment, and our undergraduate and graduate programs exemplify that drive for excellence. Faculty, research engineers, and students engage in leading scholarship and significant research alongside partners in both industry and government.

Electrical and computer engineering graduate students pursuing M.S. and Ph.D. degrees work with our accomplished faculty on high-impact research projects while gaining experience in specialized areas of interest. Focus areas include carbon, diamond, and silicon nanotechnology; hybrid and embedded systems; medical image processing; photonics; radiation effects and reliability; and robotics.

We offer an ABET-accredited undergraduate degree in electrical and computer engineering, a double major in electrical and computer engineering and biomedical engineering, and several minors.

The immersive Senior Design Project brings together interdisciplinary teams of engineers to solve cutting-edge problems. Prior teams have gone on to start companies, develop new industry products, and launch research careers. Summer research opportunities are also available through the School of Engineering and the NSF Research Experiences for Undergraduates (REU) program.

Bennett Landman, Chair

Tim Holman, Director of Undergraduate Studies

Alan Peters, Director of Graduate Studies

Jack Noble, Director of Graduate Recruiting

Department Administration Staff

Department of Electrical and Computer Engineering

Vanderbilt University

PMB 351824

2301 Vanderbilt Place

Nashville, TN 37235-1824